Introducing an 8-GPU RTX 4090 Server

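Compared with cards such as the RTX A6000, the GeForce RTX 4090 is physically very large and draws as much as 450 W, so building a server that holds eight of them is generally considered difficult. This article introduces a server we sell that accommodates eight RTX 4090 GPUs and is built on two AMD EPYC Genoa CPUs.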

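The RTX 4090 we sell is a model with the same physical dimensions as cards such as the RTX A6000, so eight of them fit in a 4U server. It uses an external-exhaust design: the cooling fan exhausts only out of the rear of the server and never into the chassis, so GPU heat does not raise the temperature inside the server. Eight 12VHPWR/600W auxiliary GPU power cables are included, so supplying power to up to eight GPUs is not a problem. To customize this server and request a quote, click here.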

For this article, we installed eight RTX 4090 GPUs in this server:

(base) dl@dl-machine:~$ nvidia-smi

Sat Sep 9 13:59:26 2023

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.104.05 Driver Version: 535.104.05 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA GeForce RTX 4090 On | 00000000:01:00.0 Off | Off |

| 33% 33C P8 12W / 450W | 13MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 1 NVIDIA GeForce RTX 4090 On | 00000000:21:00.0 Off | Off |

| 34% 34C P8 18W / 450W | 13MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 2 NVIDIA GeForce RTX 4090 On | 00000000:41:00.0 Off | Off |

| 33% 33C P8 19W / 450W | 13MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 3 NVIDIA GeForce RTX 4090 On | 00000000:61:00.0 Off | Off |

| 33% 32C P8 11W / 450W | 13MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 4 NVIDIA GeForce RTX 4090 On | 00000000:81:00.0 Off | Off |

| 34% 32C P8 12W / 450W | 13MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 5 NVIDIA GeForce RTX 4090 On | 00000000:A1:00.0 Off | Off |

| 34% 33C P8 21W / 450W | 13MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 6 NVIDIA GeForce RTX 4090 On | 00000000:C1:00.0 Off | Off |

| 34% 31C P8 19W / 450W | 13MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 7 NVIDIA GeForce RTX 4090 On | 00000000:E1:00.0 Off | Off |

| 34% 30C P8 24W / 450W | 13MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| 0 N/A N/A 4151 G /usr/lib/xorg/Xorg 4MiB |

| 1 N/A N/A 4151 G /usr/lib/xorg/Xorg 4MiB |

| 2 N/A N/A 4151 G /usr/lib/xorg/Xorg 4MiB |

| 3 N/A N/A 4151 G /usr/lib/xorg/Xorg 4MiB |

| 4 N/A N/A 4151 G /usr/lib/xorg/Xorg 4MiB |

| 5 N/A N/A 4151 G /usr/lib/xorg/Xorg 4MiB |

| 6 N/A N/A 4151 G /usr/lib/xorg/Xorg 4MiB |

| 7 N/A N/A 4151 G /usr/lib/xorg/Xorg 4MiB |

+---------------------------------------------------------------------------------------+

(base) dl@dl-machine:~$

The CPU is:

(base) dl@dl-machine:~/slurm$ lscpu|grep -i "model name"

Model name: AMD EPYC 9654 96-Core Processor

(base) dl@dl-machine:~/slurm$ nproc

192

(base) dl@dl-machine:~/slurm$

That is two AMD EPYC 9654 96-core CPUs, for 192 cores in total.

The memory is:

(base) dl@dl-machine:~/slurm$ sudo dmidecode -t memory | grep '\sVolatile Size'

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

Volatile Size: 64 GB

(base) dl@dl-machine:~/slurm$ sudo dmidecode -t memory | grep '\sVolatile Size'|wc

24 96 528

(base) dl@dl-machine:~/slurm$

That is 24 DIMMs of 64 GB each, for 1.5 TB in total.
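The total can also be computed directly from the `dmidecode` lines instead of counting them by eye. The following is a minimal sketch; the helper name `sum_volatile_gb` is hypothetical, and it assumes every populated DIMM reports its size in GB, as in the output above.

```shell
#!/bin/bash
# Sum the "Volatile Size: N GB" lines printed by `dmidecode -t memory`.
# sum_volatile_gb is a hypothetical helper name, not part of any tool.
# Lines such as "Non-Volatile Size: ..." are skipped because their first
# field is "Non-Volatile", not "Volatile".
sum_volatile_gb () {
    awk '$1 == "Volatile" && $2 == "Size:" && $4 == "GB" { total += $3 }
         END { print total + 0 }'
}

# Demo on sample input: 24 DIMMs of 64 GB each, as on this server.
for i in $(seq 24); do
    echo "Volatile Size: 64 GB"
done | sum_volatile_gb
```

On the server itself the same function would be fed with `sudo dmidecode -t memory | sum_volatile_gb`; a result of 1536 GB corresponds to 1.5 TB.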

The SSD is:

(base) dl@dl-machine:/etc/slurm-llnl$ sudo lshw -c disk

*-namespace

description: NVMe namespace

physical id: 1

logical name: /dev/nvme0n1

size: 7153GiB (7681GB)

capabilities: gpt-1.00 partitioned partitioned:gpt

configuration: guid=c97becbf-ddf5-4758-81b4-a8a15482b0ce logicalsectorsize=512 sectorsize=4096

(base) dl@dl-machine:~/slurm$

That is a single 7.68 TB NVMe SSD.

Now let's run tf_cnn_benchmarks, an easy way to evaluate GPU performance, on this server. Clone the repository at this link beforehand.

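The benchmark requires TensorFlow 1, so pull the NGC TensorFlow 1 image with singularity. We use singularity rather than docker because the jobs are submitted through slurm: jobs that depend on docker cannot be submitted directly to slurm (or to most other job schedulers).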
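As a rough sketch of the pull step: `singularity pull` names the resulting file after the last component of the image URI, with the tag separator `:` replaced by `_`. The image URI below is an assumption inferred from the `tensorflow_23.02-tf1-py3.sif` file name used later in this article, and `sif_name_for` is a hypothetical helper. The snippet only prints the command rather than running it.

```shell
#!/bin/bash
# Derive the default file name that `singularity pull` gives an image:
# the last path component, with ":" replaced by "_", plus ".sif".
# sif_name_for is a hypothetical helper. It assumes the URI carries an
# explicit tag (for example ":23.02-tf1-py3").
sif_name_for () {
    uri=$1
    last=${uri##*/}                      # e.g. tensorflow:23.02-tf1-py3
    printf '%s.sif\n' "$(printf '%s' "$last" | tr ':' '_')"
}

# Assumed image URI, inferred from the .sif file name in this article.
image=docker://nvcr.io/nvidia/tensorflow:23.02-tf1-py3

# Print the pull command and the file it is expected to create.
echo "singularity pull ${image}"
echo "expected file: $(sif_name_for "${image}")"
```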

The NVIDIA driver is already installed. For multi-GPU training on the RTX 4090, however, the driver must report peer-to-peer (P2P) access between the RTX 4090 GPUs as No, so we check that next.

(base) dl@dl-machine:/etc/slurm-llnl$ deviceQuery

deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 8 CUDA Capable device(s)

Device 0: "NVIDIA GeForce RTX 4090"

CUDA Driver Version / Runtime Version 12.2 / 11.1

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 24217 MBytes (25393692672 bytes)

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

(128) Multiprocessors, (128) CUDA Cores/MP: 16384 CUDA Cores

GPU Max Clock rate: 2520 MHz (2.52 GHz)

Memory Clock rate: 10501 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 75497472 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 1 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

Device 1: "NVIDIA GeForce RTX 4090"

CUDA Driver Version / Runtime Version 12.2 / 11.1

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 24217 MBytes (25393692672 bytes)

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

(128) Multiprocessors, (128) CUDA Cores/MP: 16384 CUDA Cores

GPU Max Clock rate: 2520 MHz (2.52 GHz)

Memory Clock rate: 10501 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 75497472 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 33 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

Device 2: "NVIDIA GeForce RTX 4090"

CUDA Driver Version / Runtime Version 12.2 / 11.1

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 24217 MBytes (25393692672 bytes)

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

(128) Multiprocessors, (128) CUDA Cores/MP: 16384 CUDA Cores

GPU Max Clock rate: 2520 MHz (2.52 GHz)

Memory Clock rate: 10501 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 75497472 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 65 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

Device 3: "NVIDIA GeForce RTX 4090"

CUDA Driver Version / Runtime Version 12.2 / 11.1

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 24217 MBytes (25393692672 bytes)

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

(128) Multiprocessors, (128) CUDA Cores/MP: 16384 CUDA Cores

GPU Max Clock rate: 2520 MHz (2.52 GHz)

Memory Clock rate: 10501 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 75497472 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 97 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

Device 4: "NVIDIA GeForce RTX 4090"

CUDA Driver Version / Runtime Version 12.2 / 11.1

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 24217 MBytes (25393692672 bytes)

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

(128) Multiprocessors, (128) CUDA Cores/MP: 16384 CUDA Cores

GPU Max Clock rate: 2520 MHz (2.52 GHz)

Memory Clock rate: 10501 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 75497472 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 129 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

Device 5: "NVIDIA GeForce RTX 4090"

CUDA Driver Version / Runtime Version 12.2 / 11.1

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 24217 MBytes (25393692672 bytes)

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

(128) Multiprocessors, (128) CUDA Cores/MP: 16384 CUDA Cores

GPU Max Clock rate: 2520 MHz (2.52 GHz)

Memory Clock rate: 10501 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 75497472 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 161 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

Device 6: "NVIDIA GeForce RTX 4090"

CUDA Driver Version / Runtime Version 12.2 / 11.1

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 24217 MBytes (25393692672 bytes)

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

(128) Multiprocessors, (128) CUDA Cores/MP: 16384 CUDA Cores

GPU Max Clock rate: 2520 MHz (2.52 GHz)

Memory Clock rate: 10501 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 75497472 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 193 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

Device 7: "NVIDIA GeForce RTX 4090"

CUDA Driver Version / Runtime Version 12.2 / 11.1

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 24217 MBytes (25393692672 bytes)

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

MapSMtoCores for SM 8.9 is undefined. Default to use 128 Cores/SM

(128) Multiprocessors, (128) CUDA Cores/MP: 16384 CUDA Cores

GPU Max Clock rate: 2520 MHz (2.52 GHz)

Memory Clock rate: 10501 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 75497472 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 225 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU1) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU2) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU3) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU4) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU5) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU6) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU7) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU0) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU2) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU3) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU4) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU5) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU6) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU7) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU2) -> NVIDIA GeForce RTX 4090 (GPU0) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU2) -> NVIDIA GeForce RTX 4090 (GPU1) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU2) -> NVIDIA GeForce RTX 4090 (GPU3) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU2) -> NVIDIA GeForce RTX 4090 (GPU4) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU2) -> NVIDIA GeForce RTX 4090 (GPU5) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU2) -> NVIDIA GeForce RTX 4090 (GPU6) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU2) -> NVIDIA GeForce RTX 4090 (GPU7) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU3) -> NVIDIA GeForce RTX 4090 (GPU0) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU3) -> NVIDIA GeForce RTX 4090 (GPU1) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU3) -> NVIDIA GeForce RTX 4090 (GPU2) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU3) -> NVIDIA GeForce RTX 4090 (GPU4) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU3) -> NVIDIA GeForce RTX 4090 (GPU5) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU3) -> NVIDIA GeForce RTX 4090 (GPU6) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU3) -> NVIDIA GeForce RTX 4090 (GPU7) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU4) -> NVIDIA GeForce RTX 4090 (GPU0) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU4) -> NVIDIA GeForce RTX 4090 (GPU1) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU4) -> NVIDIA GeForce RTX 4090 (GPU2) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU4) -> NVIDIA GeForce RTX 4090 (GPU3) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU4) -> NVIDIA GeForce RTX 4090 (GPU5) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU4) -> NVIDIA GeForce RTX 4090 (GPU6) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU4) -> NVIDIA GeForce RTX 4090 (GPU7) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU5) -> NVIDIA GeForce RTX 4090 (GPU0) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU5) -> NVIDIA GeForce RTX 4090 (GPU1) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU5) -> NVIDIA GeForce RTX 4090 (GPU2) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU5) -> NVIDIA GeForce RTX 4090 (GPU3) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU5) -> NVIDIA GeForce RTX 4090 (GPU4) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU5) -> NVIDIA GeForce RTX 4090 (GPU6) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU5) -> NVIDIA GeForce RTX 4090 (GPU7) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU6) -> NVIDIA GeForce RTX 4090 (GPU0) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU6) -> NVIDIA GeForce RTX 4090 (GPU1) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU6) -> NVIDIA GeForce RTX 4090 (GPU2) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU6) -> NVIDIA GeForce RTX 4090 (GPU3) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU6) -> NVIDIA GeForce RTX 4090 (GPU4) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU6) -> NVIDIA GeForce RTX 4090 (GPU5) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU6) -> NVIDIA GeForce RTX 4090 (GPU7) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU7) -> NVIDIA GeForce RTX 4090 (GPU0) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU7) -> NVIDIA GeForce RTX 4090 (GPU1) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU7) -> NVIDIA GeForce RTX 4090 (GPU2) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU7) -> NVIDIA GeForce RTX 4090 (GPU3) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU7) -> NVIDIA GeForce RTX 4090 (GPU4) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU7) -> NVIDIA GeForce RTX 4090 (GPU5) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU7) -> NVIDIA GeForce RTX 4090 (GPU6) : No

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 12.2, CUDA Runtime Version = 11.1, NumDevs = 8

Result = PASS

(base) dl@dl-machine:/etc/slurm-llnl$

With eight GPUs installed the output is long, but every Peer access line at the end shows No at its right-hand end, so this driver is fine.
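With 56 Peer access lines to read, the check is easy to automate. The following is a minimal sketch, assuming the `deviceQuery` output format shown above; `all_peer_access_no` is a hypothetical helper name.

```shell
#!/bin/bash
# Succeed only if the deviceQuery output on stdin contains at least one
# "Peer access from" line and every such line ends in "No".
# all_peer_access_no is a hypothetical helper name.
all_peer_access_no () {
    awk '/Peer access from/ { n++; if ($NF != "No") bad++ }
         END { exit ((n > 0 && bad == 0) ? 0 : 1) }'
}

# Demo on two sample lines in the same format as the real output.
printf '%s\n' \
  '> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU1) : No' \
  '> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU0) : No' \
  | all_peer_access_no && echo "P2P is reported as No for all GPU pairs"
```

On the server this would be run as `deviceQuery | all_peer_access_no`. Requiring at least one matching line keeps an empty or failed `deviceQuery` run from passing the check by accident.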

To confirm that the slurm job scheduler is running, use sinfo and scontrol:

(base) dl@dl-machine:~$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

debug* up infinite 1 idle dl-machine

(base) dl@dl-machine:~$ scontrol show nodes

NodeName=dl-machine Arch=x86_64 CoresPerSocket=1

CPUAlloc=0 CPUTot=192 CPULoad=0.72

AvailableFeatures=(null)

ActiveFeatures=(null)

Gres=gpu:rtx4090:8(S:0-1)

NodeAddr=dl-machine NodeHostName=dl-machine Version=19.05.5

OS=Linux 5.15.0-83-generic #92~20.04.1-Ubuntu SMP Mon Aug 21 14:00:49 UTC 2023

RealMemory=1547856 AllocMem=0 FreeMem=1534739 Sockets=192 Boards=1

State=IDLE ThreadsPerCore=1 TmpDisk=0 Weight=1 Owner=N/A MCS_label=N/A

Partitions=debug

BootTime=2023-09-07T15:27:15 SlurmdStartTime=2023-09-07T16:01:09

CfgTRES=cpu=192,mem=1547856M,billing=192

AllocTRES=

CapWatts=n/a

CurrentWatts=0 AveWatts=0

ExtSensorsJoules=n/s ExtSensorsWatts=0 ExtSensorsTemp=n/s

(base) dl@dl-machine:~$
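The fields that matter most here are the node state and the GPU resources registered with slurm. As a minimal sketch, a single `key=value` field can be pulled out of the `scontrol show nodes` output like this; `node_field` is a hypothetical helper, and it only handles values without spaces (so not the `OS=` field).

```shell
#!/bin/bash
# Print the value of one key=value field from `scontrol show nodes`
# output on stdin. node_field is a hypothetical helper name.
# Limitation: values containing spaces (such as OS=...) are cut short.
node_field () {
    key=$1
    tr ' ' '\n' | awk -F= -v k="$key" '
        $1 == k { sub(/^[^=]*=/, ""); print; exit }'
}

# Demo on a shortened sample of the output.
sample='NodeName=dl-machine Arch=x86_64 Gres=gpu:rtx4090:8(S:0-1) State=IDLE ThreadsPerCore=1'
echo "state: $(echo "$sample" | node_field State)"
echo "gres:  $(echo "$sample" | node_field Gres)"
```

On the server, `scontrol show nodes | node_field State` should report an idle state and `node_field Gres` should list all eight GPUs before any jobs are submitted.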

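The script that submits a comprehensive sweep of tf_cnn_benchmarks jobs to slurm is as follows.

(base) dl@dl-machine:~/slurm$ cat 4090x8.sh

#!/bin/bash

# Paths to the benchmark checkout and the TensorFlow 1 singularity image

bench=/home/dl/tf_cnn_benchmarks

tf1=/home/dl/singularity/tensorflow_23.02-tf1-py3.sif

# Directories for job logs and error output

logdir=logdir-4090x8

errdir=errdir-4090x8

# Arguments: $1 = GPU count, $2 = batch size, $3 = model, $4 = precision (fp16 or fp32)

sbatch_tf_cnn () {

    # Enable half precision only when fp16 is requested

    if [ "$4" = "fp16" ]; then

        acc="--use_fp16"

    else

        acc=""

    fi

    rm -rf ${logdir} ${errdir}

    mkdir -p ${logdir} ${errdir}

    sbatch -J "$3_gpu$1_bs$2_$4" <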
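The job name passed to `sbatch -J` encodes the model, GPU count, batch size, and precision. As a minimal sketch of the sweep, the loops below enumerate the job names without submitting anything. The parameter lists and the loop order are inferred from the job names in the queue listing; they are assumptions, not the original script, and `list_sweep_jobs` is a hypothetical helper.

```shell
#!/bin/bash
# Enumerate the job names of the benchmark sweep in the order they appear
# in the queue: precision, then GPU count, then batch size, then model.
# The lists are inferred from the squeue job names; the loop structure is
# an assumption, not the original script. Nothing is submitted here.
list_sweep_jobs () {
    for prec in fp16 fp32; do
        for gpus in 1 2 4 8; do
            for bs in 64 128 256 512; do
                for model in resnet50 inception3 vgg16 nasnet resnet152 inception4; do
                    # Same naming scheme as: sbatch -J "$3_gpu$1_bs$2_$4"
                    echo "${model}_gpu${gpus}_bs${bs}_${prec}"
                done
            done
        done
    done
}

# Show the first few names and the size of the sweep
# (2 precisions x 4 GPU counts x 4 batch sizes x 6 models).
list_sweep_jobs | head -n 3
list_sweep_jobs | wc -l
```

Under these assumptions, replacing the `echo` with a call such as `sbatch_tf_cnn "$gpus" "$bs" "$model" "$prec"` would submit one job per combination.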

Running this script submits the whole sweep of jobs, and the queue then looks like this:

JOBID PARTI NAME USER ST TIME NODES NODELIST(REASON)

6979 debug inception4_gpu1_bs128_fp16 dl PD 0:00 1 (Resources)

6980 debug resnet50_gpu1_bs256_fp16 dl PD 0:00 1 (Priority)

6981 debug inception3_gpu1_bs256_fp16 dl PD 0:00 1 (Priority)

6982 debug vgg16_gpu1_bs256_fp16 dl PD 0:00 1 (Priority)

6983 debug nasnet_gpu1_bs256_fp16 dl PD 0:00 1 (Priority)

6984 debug resnet152_gpu1_bs256_fp16 dl PD 0:00 1 (Priority)

6985 debug inception4_gpu1_bs256_fp16 dl PD 0:00 1 (Priority)

6986 debug resnet50_gpu1_bs512_fp16 dl PD 0:00 1 (Priority)

6987 debug inception3_gpu1_bs512_fp16 dl PD 0:00 1 (Priority)

6988 debug vgg16_gpu1_bs512_fp16 dl PD 0:00 1 (Priority)

6989 debug nasnet_gpu1_bs512_fp16 dl PD 0:00 1 (Priority)

6990 debug resnet152_gpu1_bs512_fp16 dl PD 0:00 1 (Priority)

6991 debug inception4_gpu1_bs512_fp16 dl PD 0:00 1 (Priority)

6992 debug resnet50_gpu2_bs64_fp16 dl PD 0:00 1 (Priority)

6993 debug inception3_gpu2_bs64_fp16 dl PD 0:00 1 (Priority)

6994 debug vgg16_gpu2_bs64_fp16 dl PD 0:00 1 (Priority)

6995 debug nasnet_gpu2_bs64_fp16 dl PD 0:00 1 (Priority)

6996 debug resnet152_gpu2_bs64_fp16 dl PD 0:00 1 (Priority)

6997 debug inception4_gpu2_bs64_fp16 dl PD 0:00 1 (Priority)

6998 debug resnet50_gpu2_bs128_fp16 dl PD 0:00 1 (Priority)

6999 debug inception3_gpu2_bs128_fp16 dl PD 0:00 1 (Priority)

7000 debug vgg16_gpu2_bs128_fp16 dl PD 0:00 1 (Priority)

7001 debug nasnet_gpu2_bs128_fp16 dl PD 0:00 1 (Priority)

7002 debug resnet152_gpu2_bs128_fp16 dl PD 0:00 1 (Priority)

7003 debug inception4_gpu2_bs128_fp16 dl PD 0:00 1 (Priority)

7004 debug resnet50_gpu2_bs256_fp16 dl PD 0:00 1 (Priority)

7005 debug inception3_gpu2_bs256_fp16 dl PD 0:00 1 (Priority)

7006 debug vgg16_gpu2_bs256_fp16 dl PD 0:00 1 (Priority)

7007 debug nasnet_gpu2_bs256_fp16 dl PD 0:00 1 (Priority)

7008 debug resnet152_gpu2_bs256_fp16 dl PD 0:00 1 (Priority)

7009 debug inception4_gpu2_bs256_fp16 dl PD 0:00 1 (Priority)

7010 debug resnet50_gpu2_bs512_fp16 dl PD 0:00 1 (Priority)

7011 debug inception3_gpu2_bs512_fp16 dl PD 0:00 1 (Priority)

7012 debug vgg16_gpu2_bs512_fp16 dl PD 0:00 1 (Priority)

7013 debug nasnet_gpu2_bs512_fp16 dl PD 0:00 1 (Priority)

7014 debug resnet152_gpu2_bs512_fp16 dl PD 0:00 1 (Priority)

7015 debug inception4_gpu2_bs512_fp16 dl PD 0:00 1 (Priority)

7016 debug resnet50_gpu4_bs64_fp16 dl PD 0:00 1 (Priority)

7017 debug inception3_gpu4_bs64_fp16 dl PD 0:00 1 (Priority)

7018 debug vgg16_gpu4_bs64_fp16 dl PD 0:00 1 (Priority)

7019 debug nasnet_gpu4_bs64_fp16 dl PD 0:00 1 (Priority)

7020 debug resnet152_gpu4_bs64_fp16 dl PD 0:00 1 (Priority)

7021 debug inception4_gpu4_bs64_fp16 dl PD 0:00 1 (Priority)

7022 debug resnet50_gpu4_bs128_fp16 dl PD 0:00 1 (Priority)

7023 debug inception3_gpu4_bs128_fp16 dl PD 0:00 1 (Priority)

7024 debug vgg16_gpu4_bs128_fp16 dl PD 0:00 1 (Priority)

7025 debug nasnet_gpu4_bs128_fp16 dl PD 0:00 1 (Priority)

7026 debug resnet152_gpu4_bs128_fp16 dl PD 0:00 1 (Priority)

7027 debug inception4_gpu4_bs128_fp16 dl PD 0:00 1 (Priority)

7028 debug resnet50_gpu4_bs256_fp16 dl PD 0:00 1 (Priority)

7029 debug inception3_gpu4_bs256_fp16 dl PD 0:00 1 (Priority)

7030 debug vgg16_gpu4_bs256_fp16 dl PD 0:00 1 (Priority)

7031 debug nasnet_gpu4_bs256_fp16 dl PD 0:00 1 (Priority)

7032 debug resnet152_gpu4_bs256_fp16 dl PD 0:00 1 (Priority)

7033 debug inception4_gpu4_bs256_fp16 dl PD 0:00 1 (Priority)

7034 debug resnet50_gpu4_bs512_fp16 dl PD 0:00 1 (Priority)

7035 debug inception3_gpu4_bs512_fp16 dl PD 0:00 1 (Priority)

7036 debug vgg16_gpu4_bs512_fp16 dl PD 0:00 1 (Priority)

7037 debug nasnet_gpu4_bs512_fp16 dl PD 0:00 1 (Priority)

7038 debug resnet152_gpu4_bs512_fp16 dl PD 0:00 1 (Priority)

7039 debug inception4_gpu4_bs512_fp16 dl PD 0:00 1 (Priority)

7040 debug resnet50_gpu8_bs64_fp16 dl PD 0:00 1 (Priority)

7041 debug inception3_gpu8_bs64_fp16 dl PD 0:00 1 (Priority)

7042 debug vgg16_gpu8_bs64_fp16 dl PD 0:00 1 (Priority)

7043 debug nasnet_gpu8_bs64_fp16 dl PD 0:00 1 (Priority)

7044 debug resnet152_gpu8_bs64_fp16 dl PD 0:00 1 (Priority)

7045 debug inception4_gpu8_bs64_fp16 dl PD 0:00 1 (Priority)

7046 debug resnet50_gpu8_bs128_fp16 dl PD 0:00 1 (Priority)

7047 debug inception3_gpu8_bs128_fp16 dl PD 0:00 1 (Priority)

7048 debug vgg16_gpu8_bs128_fp16 dl PD 0:00 1 (Priority)

7049 debug nasnet_gpu8_bs128_fp16 dl PD 0:00 1 (Priority)

7050 debug resnet152_gpu8_bs128_fp16 dl PD 0:00 1 (Priority)

7051 debug inception4_gpu8_bs128_fp16 dl PD 0:00 1 (Priority)

7052 debug resnet50_gpu8_bs256_fp16 dl PD 0:00 1 (Priority)

7053 debug inception3_gpu8_bs256_fp16 dl PD 0:00 1 (Priority)

7054 debug vgg16_gpu8_bs256_fp16 dl PD 0:00 1 (Priority)

7055 debug nasnet_gpu8_bs256_fp16 dl PD 0:00 1 (Priority)

7056 debug resnet152_gpu8_bs256_fp16 dl PD 0:00 1 (Priority)

7057 debug inception4_gpu8_bs256_fp16 dl PD 0:00 1 (Priority)

7058 debug resnet50_gpu8_bs512_fp16 dl PD 0:00 1 (Priority)

7059 debug inception3_gpu8_bs512_fp16 dl PD 0:00 1 (Priority)

7060 debug vgg16_gpu8_bs512_fp16 dl PD 0:00 1 (Priority)

7061 debug nasnet_gpu8_bs512_fp16 dl PD 0:00 1 (Priority)

7062 debug resnet152_gpu8_bs512_fp16 dl PD 0:00 1 (Priority)

7063 debug inception4_gpu8_bs512_fp16 dl PD 0:00 1 (Priority)

7064 debug resnet50_gpu1_bs64_fp32 dl PD 0:00 1 (Priority)

7065 debug inception3_gpu1_bs64_fp32 dl PD 0:00 1 (Priority)

7066 debug vgg16_gpu1_bs64_fp32 dl PD 0:00 1 (Priority)

7067 debug nasnet_gpu1_bs64_fp32 dl PD 0:00 1 (Priority)

7068 debug resnet152_gpu1_bs64_fp32 dl PD 0:00 1 (Priority)

7069 debug inception4_gpu1_bs64_fp32 dl PD 0:00 1 (Priority)

7070 debug resnet50_gpu1_bs128_fp32 dl PD 0:00 1 (Priority)

7071 debug inception3_gpu1_bs128_fp32 dl PD 0:00 1 (Priority)

7072 debug vgg16_gpu1_bs128_fp32 dl PD 0:00 1 (Priority)

7073 debug nasnet_gpu1_bs128_fp32 dl PD 0:00 1 (Priority)

7074 debug resnet152_gpu1_bs128_fp32 dl PD 0:00 1 (Priority)

7075 debug inception4_gpu1_bs128_fp32 dl PD 0:00 1 (Priority)

7076 debug resnet50_gpu1_bs256_fp32 dl PD 0:00 1 (Priority)

7077 debug inception3_gpu1_bs256_fp32 dl PD 0:00 1 (Priority)

7078 debug vgg16_gpu1_bs256_fp32 dl PD 0:00 1 (Priority)

7079 debug nasnet_gpu1_bs256_fp32 dl PD 0:00 1 (Priority)

7080 debug resnet152_gpu1_bs256_fp32 dl PD 0:00 1 (Priority)

7081 debug inception4_gpu1_bs256_fp32 dl PD 0:00 1 (Priority)

7082 debug resnet50_gpu1_bs512_fp32 dl PD 0:00 1 (Priority)

7083 debug inception3_gpu1_bs512_fp32 dl PD 0:00 1 (Priority)

7084 debug vgg16_gpu1_bs512_fp32 dl PD 0:00 1 (Priority)

7085 debug nasnet_gpu1_bs512_fp32 dl PD 0:00 1 (Priority)

7086 debug resnet152_gpu1_bs512_fp32 dl PD 0:00 1 (Priority)

7087 debug inception4_gpu1_bs512_fp32 dl PD 0:00 1 (Priority)

7088 debug resnet50_gpu2_bs64_fp32 dl PD 0:00 1 (Priority)

7089 debug inception3_gpu2_bs64_fp32 dl PD 0:00 1 (Priority)

7090 debug vgg16_gpu2_bs64_fp32 dl PD 0:00 1 (Priority)

7091 debug nasnet_gpu2_bs64_fp32 dl PD 0:00 1 (Priority)

7092 debug resnet152_gpu2_bs64_fp32 dl PD 0:00 1 (Priority)

7093 debug inception4_gpu2_bs64_fp32 dl PD 0:00 1 (Priority)

7094 debug resnet50_gpu2_bs128_fp32 dl PD 0:00 1 (Priority)

7095 debug inception3_gpu2_bs128_fp32 dl PD 0:00 1 (Priority)

7096 debug vgg16_gpu2_bs128_fp32 dl PD 0:00 1 (Priority)

7097 debug nasnet_gpu2_bs128_fp32 dl PD 0:00 1 (Priority)

7098 debug resnet152_gpu2_bs128_fp32 dl PD 0:00 1 (Priority)

7099 debug inception4_gpu2_bs128_fp32 dl PD 0:00 1 (Priority)

7100 debug resnet50_gpu2_bs256_fp32 dl PD 0:00 1 (Priority)

7101 debug inception3_gpu2_bs256_fp32 dl PD 0:00 1 (Priority)

7102 debug vgg16_gpu2_bs256_fp32 dl PD 0:00 1 (Priority)

7103 debug nasnet_gpu2_bs256_fp32 dl PD 0:00 1 (Priority)

7104 debug resnet152_gpu2_bs256_fp32 dl PD 0:00 1 (Priority)

7105 debug inception4_gpu2_bs256_fp32 dl PD 0:00 1 (Priority)

7106 debug resnet50_gpu2_bs512_fp32 dl PD 0:00 1 (Priority)

7107 debug inception3_gpu2_bs512_fp32 dl PD 0:00 1 (Priority)

7108 debug vgg16_gpu2_bs512_fp32 dl PD 0:00 1 (Priority)

7109 debug nasnet_gpu2_bs512_fp32 dl PD 0:00 1 (Priority)

7110 debug resnet152_gpu2_bs512_fp32 dl PD 0:00 1 (Priority)

7111 debug inception4_gpu2_bs512_fp32 dl PD 0:00 1 (Priority)

7112 debug resnet50_gpu4_bs64_fp32 dl PD 0:00 1 (Priority)

7113 debug inception3_gpu4_bs64_fp32 dl PD 0:00 1 (Priority)

7114 debug vgg16_gpu4_bs64_fp32 dl PD 0:00 1 (Priority)

7115 debug nasnet_gpu4_bs64_fp32 dl PD 0:00 1 (Priority)

7116 debug resnet152_gpu4_bs64_fp32 dl PD 0:00 1 (Priority)

7117 debug inception4_gpu4_bs64_fp32 dl PD 0:00 1 (Priority)

7118 debug resnet50_gpu4_bs128_fp32 dl PD 0:00 1 (Priority)

7119 debug inception3_gpu4_bs128_fp32 dl PD 0:00 1 (Priority)

7120 debug vgg16_gpu4_bs128_fp32 dl PD 0:00 1 (Priority)

7121 debug nasnet_gpu4_bs128_fp32 dl PD 0:00 1 (Priority)

7122 debug resnet152_gpu4_bs128_fp32 dl PD 0:00 1 (Priority)

7123 debug inception4_gpu4_bs128_fp32 dl PD 0:00 1 (Priority)

7124 debug resnet50_gpu4_bs256_fp32 dl PD 0:00 1 (Priority)

7125 debug inception3_gpu4_bs256_fp32 dl PD 0:00 1 (Priority)

7126 debug vgg16_gpu4_bs256_fp32 dl PD 0:00 1 (Priority)

7127 debug nasnet_gpu4_bs256_fp32 dl PD 0:00 1 (Priority)

7128 debug resnet152_gpu4_bs256_fp32 dl PD 0:00 1 (Priority)

7129 debug inception4_gpu4_bs256_fp32 dl PD 0:00 1 (Priority)

7130 debug resnet50_gpu4_bs512_fp32 dl PD 0:00 1 (Priority)

7131 debug inception3_gpu4_bs512_fp32 dl PD 0:00 1 (Priority)

7132 debug vgg16_gpu4_bs512_fp32 dl PD 0:00 1 (Priority)

7133 debug nasnet_gpu4_bs512_fp32 dl PD 0:00 1 (Priority)

7134 debug resnet152_gpu4_bs512_fp32 dl PD 0:00 1 (Priority)

7135 debug inception4_gpu4_bs512_fp32 dl PD 0:00 1 (Priority)

7136 debug resnet50_gpu8_bs64_fp32 dl PD 0:00 1 (Priority)

7137 debug inception3_gpu8_bs64_fp32 dl PD 0:00 1 (Priority)

7138 debug vgg16_gpu8_bs64_fp32 dl PD 0:00 1 (Priority)

7139 debug nasnet_gpu8_bs64_fp32 dl PD 0:00 1 (Priority)

7140 debug resnet152_gpu8_bs64_fp32 dl PD 0:00 1 (Priority)

7141 debug inception4_gpu8_bs64_fp32 dl PD 0:00 1 (Priority)

7142 debug resnet50_gpu8_bs128_fp32 dl PD 0:00 1 (Priority)

7143 debug inception3_gpu8_bs128_fp32 dl PD 0:00 1 (Priority)

7144 debug vgg16_gpu8_bs128_fp32 dl PD 0:00 1 (Priority)

7145 debug nasnet_gpu8_bs128_fp32 dl PD 0:00 1 (Priority)

7146 debug resnet152_gpu8_bs128_fp32 dl PD 0:00 1 (Priority)

7147 debug inception4_gpu8_bs128_fp32 dl PD 0:00 1 (Priority)

7148 debug resnet50_gpu8_bs256_fp32 dl PD 0:00 1 (Priority)

7149 debug inception3_gpu8_bs256_fp32 dl PD 0:00 1 (Priority)

7150 debug vgg16_gpu8_bs256_fp32 dl PD 0:00 1 (Priority)

7151 debug nasnet_gpu8_bs256_fp32 dl PD 0:00 1 (Priority)

7152 debug resnet152_gpu8_bs256_fp32 dl PD 0:00 1 (Priority)

7153 debug inception4_gpu8_bs256_fp32 dl PD 0:00 1 (Priority)

7154 debug resnet50_gpu8_bs512_fp32 dl PD 0:00 1 (Priority)

7155 debug inception3_gpu8_bs512_fp32 dl PD 0:00 1 (Priority)

7156 debug vgg16_gpu8_bs512_fp32 dl PD 0:00 1 (Priority)

7157 debug nasnet_gpu8_bs512_fp32 dl PD 0:00 1 (Priority)

7158 debug resnet152_gpu8_bs512_fp32 dl PD 0:00 1 (Priority)

7159 debug inception4_gpu8_bs512_fp32 dl PD 0:00 1 (Priority)

6968 debug resnet50_gpu1_bs64_fp16 dl R 0:12 1 dl-machine

6970 debug vgg16_gpu1_bs64_fp16 dl R 0:12 1 dl-machine

6971 debug nasnet_gpu1_bs64_fp16 dl R 0:12 1 dl-machine

6972 debug resnet152_gpu1_bs64_fp16 dl R 0:12 1 dl-machine

6975 debug inception3_gpu1_bs128_fp16 dl R 0:12 1 dl-machine

6976 debug vgg16_gpu1_bs128_fp16 dl R 0:12 1 dl-machine

6977 debug nasnet_gpu1_bs128_fp16 dl R 0:12 1 dl-machine

6978 debug resnet152_gpu1_bs128_fp16 dl R 0:12 1 dl-machine

(base) dl@dl-machine:~/slurm$

The jobs start running as shown above.

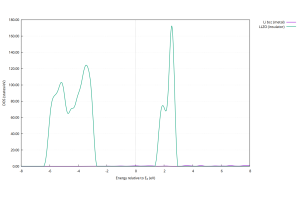
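To check how far the queue has progressed, the `squeue` listing can be tallied by job state. The following is a minimal sketch, not part of the original setup. It assumes the eight-column layout seen in the listing (job ID, partition, name, user, state, time, nodes, node list or reason) and job names without spaces. The function name `tally_job_states` is hypothetical.

```python
from collections import Counter


def tally_job_states(squeue_text):
    """Count jobs per state (e.g. PD, R) from squeue-style lines.

    Assumes columns: JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON).
    """
    counts = Counter()
    for line in squeue_text.splitlines():
        fields = line.split()
        # Skip blank lines, a header row, and shell prompts:
        # a job line has at least 8 fields and starts with a numeric job ID.
        if len(fields) < 8 or not fields[0].isdigit():
            continue
        counts[fields[4]] += 1  # fifth column is the job state
    return counts


sample = """
7159 debug inception4_gpu8_bs512_fp32 dl PD 0:00 1 (Priority)
6968 debug resnet50_gpu1_bs64_fp16 dl R 0:12 1 dl-machine
6970 debug vgg16_gpu1_bs64_fp16 dl R 0:12 1 dl-machine
(base) dl@dl-machine:~/slurm$
"""

states = tally_job_states(sample)
print(states["R"], "running,", states["PD"], "pending")
```

In practice the text would come from capturing the output of `squeue`; the sample here reuses three lines from the listing above.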

Listing the log directory shows the results:

(base) dl@dl-machine:~/slurm$ ls -lt logdir-4090x8

total 136

-rw-rw-r-- 1 dl dl 11 9月 9 16:32 nasnet_gpux2_bs256_fp16.log

-rw-rw-r-- 1 dl dl 1171 9月 9 16:32 inception3_gpux2_bs256_fp16.log

-rw-rw-r-- 1 dl dl 11 9月 9 16:31 vgg16_gpux2_bs256_fp16.log

-rw-rw-r-- 1 dl dl 1159 9月 9 16:31 inception4_gpux2_bs128_fp16.log

-rw-rw-r-- 1 dl dl 1167 9月 9 16:31 resnet50_gpux2_bs256_fp16.log

-rw-rw-r-- 1 dl dl 1168 9月 9 16:30 resnet152_gpux2_bs128_fp16.log

-rw-rw-r-- 1 dl dl 1165 9月 9 16:30 nasnet_gpux2_bs128_fp16.log

-rw-rw-r-- 1 dl dl 1159 9月 9 16:29 inception3_gpux2_bs128_fp16.log

-rw-rw-r-- 1 dl dl 1134 9月 9 16:29 resnet152_gpux1_bs64_fp32.log

-rw-rw-r-- 1 dl dl 11 9月 9 16:28 vgg16_gpux2_bs128_fp16.log

-rw-rw-r-- 1 dl dl 1169 9月 9 16:28 resnet50_gpux2_bs128_fp16.log

-rw-rw-r-- 1 dl dl 1159 9月 9 16:28 inception4_gpux2_bs64_fp16.log

-rw-rw-r-- 1 dl dl 1138 9月 9 16:28 nasnet_gpux1_bs64_fp32.log

-rw-rw-r-- 1 dl dl 1158 9月 9 16:28 vgg16_gpux2_bs64_fp16.log

-rw-rw-r-- 1 dl dl 1130 9月 9 16:27 vgg16_gpux1_bs64_fp32.log

-rw-rw-r-- 1 dl dl 1165 9月 9 16:27 resnet152_gpux2_bs64_fp16.log

-rw-rw-r-- 1 dl dl 1165 9月 9 16:26 nasnet_gpux2_bs64_fp16.log

-rw-rw-r-- 1 dl dl 1135 9月 9 16:26 inception3_gpux1_bs64_fp32.log

-rw-rw-r-- 1 dl dl 1168 9月 9 16:26 inception3_gpux2_bs64_fp16.log

-rw-rw-r-- 1 dl dl 1133 9月 9 16:25 resnet50_gpux1_bs64_fp32.log

-rw-rw-r-- 1 dl dl 1169 9月 9 16:25 resnet50_gpux2_bs64_fp16.log

-rw-rw-r-- 1 dl dl 1147 9月 9 16:25 resnet50_gpux1_bs512_fp16.log

-rw-rw-r-- 1 dl dl 391 9月 9 16:25 nasnet_gpux1_bs512_fp16.log

-rw-rw-r-- 1 dl dl 1137 9月 9 16:25 inception4_gpux1_bs256_fp16.log

-rw-rw-r-- 1 dl dl 395 9月 9 16:25 inception4_gpux1_bs512_fp16.log

-rw-rw-r-- 1 dl dl 394 9月 9 16:25 resnet152_gpux1_bs512_fp16.log

-rw-rw-r-- 1 dl dl 1136 9月 9 16:24 resnet152_gpux1_bs256_fp16.log

-rw-rw-r-- 1 dl dl 395 9月 9 16:24 inception3_gpux1_bs512_fp16.log

-rw-rw-r-- 1 dl dl 11 9月 9 16:23 vgg16_gpux1_bs512_fp16.log

-rw-rw-r-- 1 dl dl 1149 9月 9 16:23 inception3_gpux1_bs256_fp16.log

-rw-rw-r-- 1 dl dl 1133 9月 9 16:23 nasnet_gpux1_bs256_fp16.log

-rw-rw-r-- 1 dl dl 1147 9月 9 16:23 resnet50_gpux1_bs256_fp16.log

-rw-rw-r-- 1 dl dl 1137 9月 9 16:23 inception4_gpux1_bs128_fp16.log

-rw-rw-r-- 1 dl dl 11 9月 9 16:22 vgg16_gpux1_bs256_fp16.log

(base) dl@dl-machine:~/slurm$

Each job's output is written to its own file, as listed above. The contents of one file look like this:

(base) dl@dl-machine:~/slurm$ cat logdir-4090x8/inception4_gpux1_bs128_fp16.log

dl-machine

TensorFlow: 1.15

Model: inception4

Dataset: imagenet (synthetic)

Mode: training

SingleSess: False

Batch size: 128 global

128 per device

Num batches: 100

Num epochs: 0.01

Devices: ['/gpu:0']

NUMA bind: False

Data format: NCHW

Optimizer: sgd

Variables: parameter_server

==========

Generating training model

Initializing graph

Running warm up

Done warm up

Step Img/sec total_loss

1 images/sec: 693.9 +/- 0.0 (jitter = 0.0) 7.725

10 images/sec: 694.9 +/- 1.3 (jitter = 1.3) 7.662

20 images/sec: 696.2 +/- 1.0 (jitter = 1.8) 7.695

30 images/sec: 696.2 +/- 0.8 (jitter = 1.8) 7.589

40 images/sec: 695.6 +/- 0.6 (jitter = 1.4) 7.618

50 images/sec: 695.4 +/- 0.5 (jitter = 1.0) 7.659

60 images/sec: 695.0 +/- 0.5 (jitter = 0.7) 7.555

70 images/sec: 694.9 +/- 0.4 (jitter = 0.7) 7.638

80 images/sec: 694.6 +/- 0.5 (jitter = 0.7) 7.656

90 images/sec: 694.4 +/- 0.5 (jitter = 0.8) 7.696

100 images/sec: 694.4 +/- 0.4 (jitter = 0.8) 7.634

----------------------------------------------------------------

total images/sec: 694.06

----------------------------------------------------------------

(base) dl@dl-machine:~/slurm$

The log ends with the overall throughput in the `total images/sec` line.
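With dozens of log files, it is handy to pull the final throughput out of each one automatically. The sketch below is one way to do that and is not part of the original setup. It assumes the file naming seen in the listing (`<model>_gpux<N>_bs<B>_<fp16|fp32>.log`) and the `total images/sec:` line shown in the log. The names `summarize_log`, `NAME_RE`, and `TOTAL_RE` are hypothetical. A log without a total line yields `None` for the throughput; several files in the listing are only 11 bytes, which matches the length of the hostname line alone, so those runs presumably did not finish.

```python
import re
from pathlib import Path

# Assumed file name pattern, e.g. inception4_gpux1_bs128_fp16.log
NAME_RE = re.compile(
    r"(?P<model>[a-z0-9]+)_gpux(?P<gpus>\d+)_bs(?P<batch>\d+)_(?P<precision>fp16|fp32)\.log"
)
# Final summary line written at the end of a completed run
TOTAL_RE = re.compile(r"^total images/sec:\s*([0-9.]+)", re.MULTILINE)


def summarize_log(filename, text):
    """Combine the settings encoded in the file name with the measured throughput."""
    m = NAME_RE.fullmatch(Path(filename).name)
    if m is None:
        raise ValueError(f"unexpected log file name: {filename}")
    total = TOTAL_RE.search(text)
    return {
        "model": m["model"],
        "gpus": int(m["gpus"]),
        "batch": int(m["batch"]),
        "precision": m["precision"],
        # None when the run produced no summary line
        "images_per_sec": float(total.group(1)) if total else None,
    }


sample = """dl-machine
TensorFlow: 1.15
Model: inception4
100 images/sec: 694.4 +/- 0.4 (jitter = 0.8) 7.634
----------------------------------------------------------------
total images/sec: 694.06
----------------------------------------------------------------
"""

row = summarize_log("logdir-4090x8/inception4_gpux1_bs128_fp16.log", sample)
print(row)
```

To process a whole directory, the same function can be applied to each file, for example `summarize_log(p, p.read_text())` for every `p` in `Path("logdir-4090x8").glob("*.log")`.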